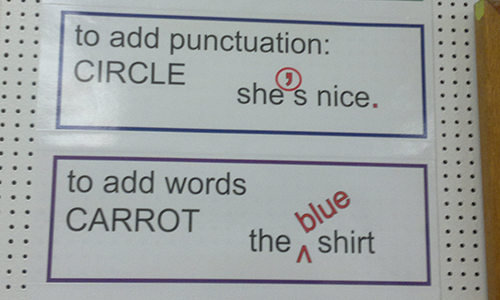

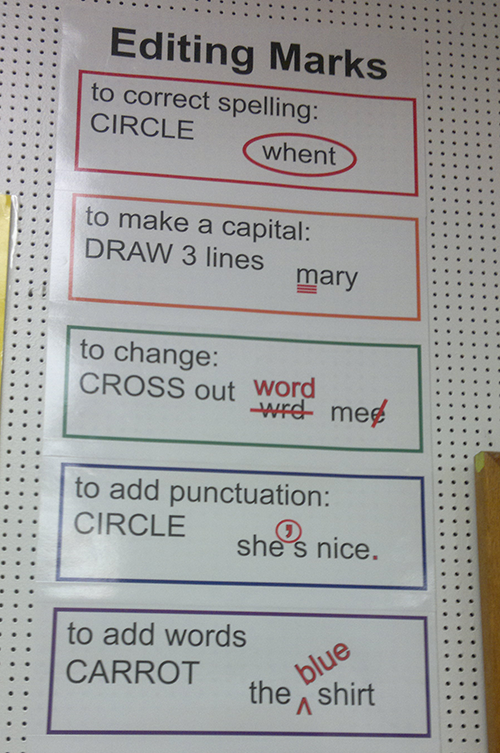

Among the helpful editing marks posted in a fifth-grade classroom in New York is the caret, described here as a “carrot.” Photo: Vincent Lima

My older child’s fifth-grade teacher had a list of proofreading marks posted in class. Among them was the symbol ^, which the teacher had labeled a “carrot.” She was an amazing teacher, so who cares?

None of us is perfect, and we don’t expect teachers to have complete mastery of language, math, science, and every other field, alongside pedagogy. In elementary school, teaching the kid who sits next to mine to cover his mouth and nose when sneezing strikes me as more important than knowing the standard spelling of caret.

But there’s a line.

What makes a great teacher is perhaps impossible to capture in a teacher-licensing test. But we can capture the extent of knowledge in some important areas and draw that line between adequate and inadequate knowledge.

Problems in North Carolina

The Charlotte Observer on August 1 reported, “Hundreds of NC teachers are flunking math exams. It may not be their fault.” The article, by Ann Doss Helms, raises interesting questions about how the state board of education is drawing that line in North Carolina. More broadly, it brings up important points about the validity of tests for licensing and certification purposes.

North Carolina requires teachers to have “completed a state approved teacher education program” and to pass a licensing test. Though Praxis II results (from ETS) are still accepted, the article reports that the state switched three years ago to a test prepared and administered for North Carolina by Pearson. The test has two subtests, one of which covers math.

Whereas Praxis passing rates were around 86 percent between 2011 and 2014, the Pearson passing rate for math was as low as 55 percent in 2016–17, according to a report presented to the state Board of Education.¹

Alarmed, the state “has named a committee of experts to review whether the Pearson test is aligned with the state’s K-8 curriculum and look at better alternatives,” Ms. Doss Helms writes.

(Of course, it could be that the new teachers are less knowledgeable, on the whole, than past candidates for licensure; perhaps the teacher-education programs have fallen apart, or the best aspiring teachers are flocking to another state—or another profession. Or some combination of these factors. But the most obvious explanation for the plummeting passing rate does seem to be the change in licensing test. So it makes perfect sense to look there first.)

Wait, what?

The fact that the state felt a need to look into the question of alignment retroactively is curious.

Standard practice for any professional credentialing exam is to start with alignment, and think about alignment at every step. If you’re not doing that, you’re not doing credentialing right.²

What does “alignment” look like for teachers?

The best practice is to have a representative group of the actual teachers from the jurisdiction come together, alongside other stakeholders like principals, parents, perhaps students, and board of education members. Some teachers would be urban, some suburban, some rural; some would teach kindergarten, some eighth grade; some would be new teachers, some very experienced. Together, the stakeholders would figure out what knowledge teachers regularly use in their professional lives.

In the North Carolina case, expected math knowledge is listed in excellent detail. One of the “objectives” in the document is

- Understand and apply principles of number theory.

Several examples are listed. Among them are

- Find the prime factorization of a number and recognize its uses.

- Apply the least common multiple (LCM) and greatest common factor (GCF) in real-world situations.

A good question for Ms. Doss Helms to ask the state board and Pearson is whether this list was developed in consultation with stakeholders like those described above.

Validating knowledge expectations

The next best practice is to validate this outline by surveying a larger, representative group of teachers from the jurisdiction.

The North Carolina outline includes this example under the objective, “Understanding linear functions and linear equations”:

- Distinguish between linear and nonlinear functions.

I’d speculate that in a survey, teachers who teach kindergarten through fifth grade might report that they never need to use that knowledge in their work, whereas eighth-grade teachers might report using it sometimes. Policy makers might then decide to exclude that knowledge from the licensing test, or make it count for only a small fraction of the score. They might even decide to develop different tests for teachers in elementary and middle schools.

A second question for the state board, then, is whether the knowledge outline used for the test was validated by survey.

Who writes questions?

The third best practice is to have teachers write the questions on the test, faithful to the approved outline.

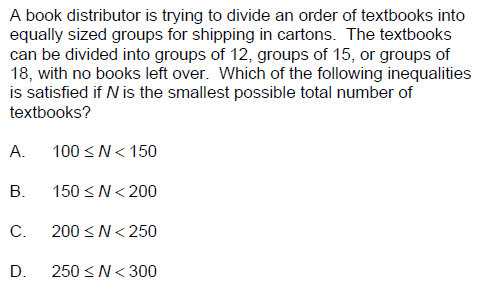

A sample item from the NC General Curriculum Mathematics Subtest © 2013, Pearson

In the North Carolina case, a sample test includes the following item, which appears to correspond to the expectation that candidates are able to “apply the least common multiple (LCM) and greatest common factor (GCF) in real-world situations.”

A book distributor is trying to divide an order of textbooks into equally sized groups for shipping in cartons. The textbooks can be divided into groups of 12, groups of 15, or groups of 18, with no books left over.

The item goes on to ask for the approximate “smallest possible total number of textbooks.”

Admittedly, in a “real-world situation,” we’d usually know how many textbooks we were trying to ship before knowing how many equally sized groups we could divide them into. All in all, though, if a North Carolina teacher needs to know how to apply LCM and GCF in real-world situations, then this is a reasonable way of finding out whether he or she does.
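For readers who want to check the arithmetic, here is a minimal Python sketch. It is my own illustration, not anything Pearson or the state publishes, and it assumes the item means the total must divide evenly by 12, by 15, and by 18, so the smallest such total is their least common multiple. The prime factorizations make the answer visible: 12 = 2²·3, 15 = 3·5, and 18 = 2·3², so the LCM is 2²·3²·5 = 180. The helper names `lcm`, `prime_factors`, and `group_sizes` are hypothetical; `math.gcd` and `functools.reduce` come from the Python standard library, and the type hints assume Python 3.9 or later.

```python
from functools import reduce
from math import gcd


def lcm(a: int, b: int) -> int:
    # For positive integers, lcm(a, b) * gcd(a, b) == a * b,
    # which is one way the LCM and GCF objectives connect.
    return a * b // gcd(a, b)


def prime_factors(n: int) -> dict[int, int]:
    """Return {prime: exponent} for n >= 2, using simple trial division."""
    factors: dict[int, int] = {}
    p = 2
    while p * p <= n:
        while n % p == 0:
            factors[p] = factors.get(p, 0) + 1
            n //= p
        p += 1
    if n > 1:  # whatever remains is itself prime
        factors[n] = factors.get(n, 0) + 1
    return factors


# Hypothetical: the three carton group sizes from the sample item.
group_sizes = [12, 15, 18]

# Fold lcm over the list: lcm(lcm(12, 15), 18).
smallest_order = reduce(lcm, group_sizes)

for size in group_sizes:
    print(size, prime_factors(size))
print("smallest order:", smallest_order)
```

Running it prints each group size with its prime factorization, then `smallest order: 180`. Writing `lcm` by hand rather than calling a built-in keeps the link to the greatest common factor explicit.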

One advantage of hiring an oversize firm like Pearson to administer your tests, presumably, is that they have lots of questions up their sleeve, and you can spare yourself the expense of having teachers write these questions. Still, I would want a group of teachers from the jurisdiction at least to review the questions to confirm relevance.

For example, in an item on the sample math test, a supplier “charged 5% sales tax.” In North Carolina, sales tax varies by jurisdiction; the base state rate is 4.75%, so this question is probably okay. In New Hampshire, which has no sales tax, teachers may prefer a different version of the question. (Maybe the supplier gave a 5% discount?)

A third question, then, for the state board—and Pearson—is whether North Carolina teachers are involved in writing and reviewing items on the test.

Drawing the line

Finally, standard practice is for the stakeholders to determine the passing standard. Given a set of items, what score must an aspiring teacher achieve to pass the test?

This is the line that we started with.

Where to draw the line is a judgment call. The people best qualified to make that judgment are the stakeholders we have been talking about: the teachers, along with the principals and parents. The final decision, presumably, belongs to the state board of education, which would also take into consideration the impact of the standard.

According to testing standards, the impact of the standard (the passing rate) should not be a consideration in setting the passing standard.³ However, unless the board is inundated with complaints about teachers lacking knowledge and skills, it can reasonably reject as too stringent a standard that would bring about a big drop in the passing rate and deprive thousands of aspiring teachers of work.

In North Carolina, do educators recommend the passing standard and does the board determine it?

A response from the publisher

As it happens, we do have an answer to that question. Whereas the original Charlotte Observer story had no comment from Pearson, an update on August 2 included a Pearson statement that reads, in relevant part, “Test scores required for passing are determined by the State and are informed by recommendations from North Carolina educators resulting from standard setting activities.”

It appears, then, that the state itself set the passing standard!

Thus, perhaps more detailed questions need to be asked: Was the state board given access to the projected impact of the standard on passing rates? If so, did it expect the passing rate to plummet? Or was it given estimates that turned out to be wildly inaccurate? If the estimates were so far off, how does Pearson explain the discrepancy?

While these are questions for North Carolina, the key takeaway for the rest of us is to make sure we have full stakeholder involvement in aligning our tests with the profession for which they serve as a gateway. We don’t want to have to search for that alignment only after the fact.

Work Cited

American Educational Research Association, American Psychological Association, National Council on Measurement in Education. (2014). Standards for Educational and Psychological Testing. Washington, D.C.: American Educational Research Association.

1. The report was by Thomas Tomberlin, director of district human resources at the state Department of Public Instruction, and Andrew Sioberg, the department’s director of educator preparation.

2. Standard 11.13 of the Standards for Educational and Psychological Testing reads, “The content domain to be covered by a credentialing test should be defined clearly and justified in terms of the importance of the content for credential-worthy performance in an occupation or profession. A rationale or evidence should be provided to support the claim that the knowledge or skills being assessed are required for credential-worthy performance in that occupation and are consistent with the purpose for which the credentialing program was instituted.” (American Educational Research Association, American Psychological Association, National Council on Measurement in Education, 2014)

3. Standard 11.16 of the Standards for Educational and Psychological Testing reads, “The level of performance required for passing a credentialing test should depend on the knowledge and skills required for credential-worthy performance in the occupation or profession and should not be adjusted to control the number or proportion of persons passing the test.” (American Educational Research Association, American Psychological Association, National Council on Measurement in Education, 2014)